Optimize your data integration strategy with Azure Data Factory to enhance workflows, improve decision-making, and tackle common challenges efficiently.

In today’s business landscape, data integration has become a cornerstone strategy, enabling seamless execution of business operations.

The increasing complexity of corporate environments, fueled by a surge in data generated from diverse sources, presents significant challenges for IT teams.

Without a strong data integration strategy, organizations may face issues such as standardization gaps and inefficient data management, ultimately leading to operational inefficiencies.

Data Integration with Azure Data Factory - Download Documentation

Any data integration strategy involves a complex process that must be meticulously planned and organized to ensure it operates smoothly.

Data integration with Azure Data Factory (ADF) often presents challenges that can negatively impact business performance.

Addressing and avoiding these obstacles requires a robust approach to data integration. Discover how to overcome the most common data integration challenges with Azure Data Factory.

Ebook: Data Integration with Azure Data Factory

In this ebook, you will find the keys to creating a framework that simplifies and streamlines data integration processes with Azure Data Factory (ADF).

Data Integration as a Driver of Business Success

Data integration goes beyond mere information consolidation; it’s a vital component for organizations aiming not only to manage their information but also to derive strategic value from it.

Rather than simply centralizing all relevant data in one place, data integration is part of a broader process that enables interoperability and the fusion of disparate systems, allowing experts to analyze data in a unified way. This capability is essential for companies to make effective, data-driven decisions.

Far from being a mere technical issue, data integration is a strategic investment that empowers companies to optimize workflows, enhance data-based decision-making, and stay competitive in an increasingly information-driven world.

Data Integration with Azure Data Factory

Azure Data Factory (ADF) is one of the most widely adopted data integration platforms among companies of all sizes, thanks to its ability to orchestrate complex ETL (extract, transform, load) and ELT (extract, load, transform) workflows. Known for its flexibility and scalability, ADF is consistently positioned as a leading tool in the industry, as reflected in Microsoft's repeated placement in the Leaders quadrant of Gartner's Magic Quadrant for data integration tools.

What is Azure Data Factory?

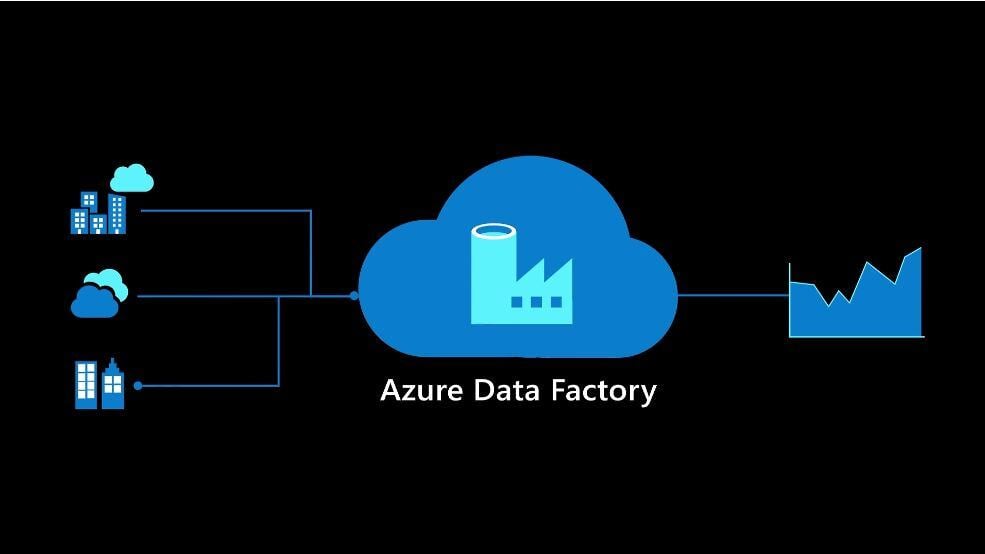

Azure Data Factory (ADF) is a cloud-based data integration service developed by Microsoft. It is designed to streamline the ingestion, transformation, and movement of data between disparate systems, whether on-premises or in the cloud.

ADF enables the creation of automated workflows that manage the movement and processing of large data volumes, orchestrating diverse data sources within a single environment.

In simpler terms, Azure Data Factory acts as an “orchestra conductor” that connects databases, files, and data services, moving, transforming, and loading data as needed. It’s particularly useful for automating tasks such as copying data between servers, cleaning or transforming data for analysis, and loading large data volumes into data stores such as Azure SQL Database or Azure Data Lake Storage.

ADF’s primary goal is to simplify data integration work, enabling both technical and non-technical teams to build data pipelines with a visual drag-and-drop interface and reducing the need for complex code.

Ideal for businesses that handle large data volumes, Azure Data Factory offers a scalable, secure, and efficient solution for consolidating and transforming data, maximizing its value for analysis and business decision-making.

What Does Azure Data Factory Do?

Azure Data Factory stands out for its more than 90 built-in connectors, which allow it to unify data from multiple sources, including enterprise databases, data warehouses, SaaS applications, and services across the Azure ecosystem.

This flexibility is essential for businesses looking to integrate and transform large-scale data efficiently.

However, despite its advanced capabilities, using Azure Data Factory is not without challenges. Common hurdles include managing complex workflows, optimizing data pipelines for peak performance, and ensuring precise configuration to prevent operational bottlenecks.

If not addressed properly, these issues can reduce the efficiency and performance of integration processes, ultimately affecting the organization’s overall operations.

Common Data Integration Challenges with Azure Data Factory

Working with Azure Data Factory to integrate data efficiently requires a well-structured approach and clear planning. Without a solid strategy, it’s easy to fall into common pitfalls that may hinder project success.

Here, we outline some of the most typical challenges encountered in data integration using Azure Data Factory:

Isolated Project Development

Data integration projects are often developed in isolation, resulting in fragmented solutions. Without a standardized approach, inconsistencies arise that make future integration between systems and processes more difficult, increasing the risk of errors and operational failures.

Disorganization and Logic Duplication

Inconsistent workflow design often leads to unnecessary duplication of processes, such as data loading, which not only consumes extra resources but also increases the likelihood of operational errors. Without careful design, the environment becomes more complex and harder to manage.
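A common way to keep load logic from being duplicated is a metadata-driven design: one parameterized routine processes every entry in a control table, instead of one near-identical pipeline per table. In ADF this is usually built from a Lookup activity that reads the control table and a ForEach activity that calls a single parameterized Copy activity. The Python sketch below only models the idea locally; `LoadConfig`, `build_copy_plan`, the table names, and the `@last_watermark` placeholder are hypothetical and not part of any ADF API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class LoadConfig:
    """One row of a hypothetical control table describing a single load."""
    source_table: str
    target_table: str
    load_type: str  # "full" or "incremental" (illustrative values)
    watermark_column: Optional[str] = None


def build_copy_plan(configs: list[LoadConfig]) -> list[dict]:
    """Turn control-table rows into parameter sets for ONE generic copy step.

    Every table goes through the same routine; only the parameters differ,
    so the load logic is defined once instead of being copied per table.
    """
    plan = []
    for cfg in configs:
        if cfg.load_type == "incremental" and not cfg.watermark_column:
            raise ValueError(f"{cfg.source_table}: incremental load needs a watermark column")
        # Table names are assumed to come from a trusted control table;
        # never interpolate untrusted input into SQL like this.
        query = f"SELECT * FROM {cfg.source_table}"
        if cfg.load_type == "incremental":
            # @last_watermark is a placeholder for the last successfully loaded value
            query += f" WHERE {cfg.watermark_column} > @last_watermark"
        plan.append({"source_query": query, "sink_table": cfg.target_table})
    return plan


configs = [
    LoadConfig("sales.orders", "stg.orders", "incremental", "modified_at"),
    LoadConfig("sales.customers", "stg.customers", "full"),
]
plan = build_copy_plan(configs)
for step in plan:
    print(step)
```

Adding a table then means adding a control-table row, not building another pipeline.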

Onboarding Challenges for New Users

A disorganized integration environment can be extremely challenging for new team members. Without adequate documentation or a clear structure, new users take longer to get familiar with the solutions and operate them effectively.

Redundant Components

Without a clear strategy for pipeline design, duplicate components such as “linked services” and “datasets” are common. This redundancy complicates maintenance, creates confusion, and unnecessarily raises operational costs.
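Redundant linked services can often be spotted mechanically in a factory's exported JSON definitions, where each linked service has a `name` and a `properties` object containing its `type` and `typeProperties`. The sketch below, a hypothetical helper named `find_redundant_linked_services`, groups definitions whose type and connection settings are identical. The names and connection strings are placeholders; real definitions often reference Azure Key Vault secrets rather than inline strings.

```python
import json
from collections import defaultdict


def find_redundant_linked_services(definitions: list[dict]) -> list[list[str]]:
    """Group linked services whose type and connection settings are identical.

    Assumes each definition follows the exported JSON shape:
    {"name": ..., "properties": {"type": ..., "typeProperties": {...}}}.
    Returns sorted name lists, one per group of two or more duplicates.
    """
    groups = defaultdict(list)
    for d in definitions:
        props = d.get("properties", {})
        # sort_keys makes the fingerprint independent of key order
        fingerprint = json.dumps(props.get("typeProperties", {}), sort_keys=True)
        groups[(props.get("type", ""), fingerprint)].append(d["name"])
    return [sorted(names) for names in groups.values() if len(names) > 1]


definitions = [
    {"name": "ls_sql_sales", "properties": {"type": "AzureSqlDatabase",
        "typeProperties": {"connectionString": "Server=srv1;Database=sales"}}},
    {"name": "ls_sql_sales_copy", "properties": {"type": "AzureSqlDatabase",
        "typeProperties": {"connectionString": "Server=srv1;Database=sales"}}},
    {"name": "ls_blob_raw", "properties": {"type": "AzureBlobStorage",
        "typeProperties": {"connectionString": "AccountName=rawdata"}}},
]
print(find_redundant_linked_services(definitions))  # → [['ls_sql_sales', 'ls_sql_sales_copy']]
```

Exact matching only catches verbatim copies; near-duplicates (for example, the same server written with different casing) still need a manual review. Once duplicates are found, a single parameterized linked service can usually replace them.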

Elevated Costs

Inadequate planning often leads to redundant processes and components, which in turn increase resource consumption. The result is operating costs that more efficient resource management could have avoided.

Slow Processes and Lack of Optimization

Running independent activities sequentially when they could run in parallel, or using parallelism incorrectly in data flows, can slow processes and reduce efficiency. Without proper optimization, businesses may experience delays in data delivery, negatively impacting operational performance.
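ADF's ForEach activity exposes this trade-off directly through its `isSequential` and `batchCount` settings: when `isSequential` is false, `batchCount` caps how many iterations run at once. The local Python simulation below mirrors that behavior with a bounded thread pool. `copy_table` and `run_loads` are hypothetical stand-ins; `copy_table` sleeps instead of moving data, so the example shows only the scheduling difference, not real ADF performance.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def copy_table(table: str) -> str:
    """Hypothetical stand-in for an I/O-bound copy; sleeps instead of moving data."""
    time.sleep(0.1)
    return f"{table}: copied"


def run_loads(tables: list[str], is_sequential: bool, batch_count: int = 20) -> list[str]:
    """Run independent loads one by one, or concurrently with an upper bound.

    is_sequential and batch_count mirror the intent of the ForEach settings,
    but this is a local simulation, not ADF code.
    """
    if is_sequential:
        return [copy_table(t) for t in tables]
    with ThreadPoolExecutor(max_workers=batch_count) as pool:
        # map() returns results in input order, even though tasks overlap in time
        return list(pool.map(copy_table, tables))


tables = [f"table_{i}" for i in range(8)]
for sequential in (True, False):
    start = time.perf_counter()
    results = run_loads(tables, is_sequential=sequential, batch_count=4)
    print(f"sequential={sequential}: {time.perf_counter() - start:.1f}s")
```

With eight 0.1 s tasks, the sequential run should take roughly 0.8 s and the four-worker run roughly 0.2 s. Parallelism only helps for loads that are truly independent, and the upper bound matters because sources and sinks can handle only so many concurrent connections.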

Deficient Data Governance

Without a solid data governance framework, errors in data loading processes become hard to identify and correct. This affects data quality and availability and slows the response to incidents.
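One building block for catching loading errors early is an automated check after each load. The sketch below shows a minimal post-load validation that reconciles source and target row counts and checks the key column for nulls and duplicates. `validate_load`, `LoadCheck`, and the sample rows are hypothetical; in a real setup the counts and rows would come from queries against the source and target systems.

```python
from dataclasses import dataclass, field


@dataclass
class LoadCheck:
    """Outcome of the post-load checks for one table."""
    table: str
    issues: list[str] = field(default_factory=list)

    @property
    def passed(self) -> bool:
        return not self.issues


def validate_load(table: str, source_count: int, rows: list[dict], key: str) -> LoadCheck:
    """Reconcile row counts and check the key column after a load.

    source_count stands for a count taken from the source system and rows for
    the loaded target data; both are passed in to keep the sketch self-contained.
    """
    check = LoadCheck(table)
    if len(rows) != source_count:
        check.issues.append(f"row count mismatch: source={source_count}, target={len(rows)}")
    null_keys = sum(1 for r in rows if r.get(key) is None)
    if null_keys:
        check.issues.append(f"{null_keys} row(s) with null {key}")
    keys = [r[key] for r in rows if r.get(key) is not None]
    if len(keys) != len(set(keys)):
        check.issues.append(f"duplicate values in {key}")
    return check


target_rows = [{"order_id": 1}, {"order_id": 2}, {"order_id": 2}, {"order_id": None}]
result = validate_load("stg.orders", source_count=5, rows=target_rows, key="order_id")
print(result.passed, result.issues)
```

Recording each `LoadCheck` alongside the run that produced it gives the incident trail that governance depends on.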

Lack of Centralized Control

The absence of centralized process management limits visibility over data flow and its status, making it harder to identify and quickly resolve issues. This can lead to operational delays and impact decision-making.
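Centralized control starts with a single view of run status across pipelines and factories. The sketch below aggregates run records into status counts and a list of failed pipelines. The `PipelineRun` record, `summarize_runs`, and the factory and pipeline names are hypothetical; in practice the records would be pulled from ADF's monitoring data (its pipeline run history) rather than hard-coded. The status strings follow values ADF reports for pipeline runs, such as `Succeeded`, `Failed`, and `InProgress`.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class PipelineRun:
    """Minimal, hypothetical run record; real monitoring data has more fields."""
    factory: str
    pipeline: str
    status: str  # e.g. "Succeeded", "Failed", "InProgress", "Queued", "Cancelled"


def summarize_runs(runs: list[PipelineRun]) -> tuple[Counter, list[str]]:
    """Build one cross-factory view: status counts plus the failed pipelines."""
    counts = Counter(r.status for r in runs)
    failed = sorted(f"{r.factory}/{r.pipeline}" for r in runs if r.status == "Failed")
    return counts, failed


runs = [
    PipelineRun("adf-sales", "pl_load_orders", "Succeeded"),
    PipelineRun("adf-sales", "pl_load_customers", "Failed"),
    PipelineRun("adf-finance", "pl_load_ledger", "InProgress"),
    PipelineRun("adf-finance", "pl_load_budget", "Failed"),
]
counts, failed = summarize_runs(runs)
print(dict(counts))
print(failed)
```

Keeping the summary logic separate from how runs are fetched lets the same view cover several factories or environments.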

Disconnect Between Technical and Business Needs

Technical solutions frequently fail to align with the business's functional objectives. This lack of communication between technical and business teams can produce solutions that do not deliver the expected value, limiting their effectiveness and return on investment.

Conclusion

Data integration with Azure Data Factory is not just a technical necessity; it is an essential strategy for enhancing business efficiency and competitiveness. Through a well-structured approach that anticipates potential challenges, organizations can optimize their integration processes, reduce operational costs, and improve data-driven decision-making. Adopting strong practices and keeping technical work aligned with business needs helps make data integration a vital driver of organizational success.