Find out what hallucinations in GenAI are and discover some of the most talked-about examples of AI chatbot hallucinations.

Since the appearance of ChatGPT and other generative artificial intelligence software, we have witnessed these systems generate incorrect, inaccurate or simply "invented" answers. This phenomenon is popularly known as "AI hallucinations". In this article we explore what a hallucination in generative AI is, through examples of real AI hallucinations. We also explore their dangers and possible repercussions.

In March 2023, several weeks before the coronation of King Charles III of England on 6 May 2023, a user asked ChatGPT about the royal event.

The chatbot stated: "The coronation ceremony took place at Westminster Abbey in London on 19 May 2023. Westminster Abbey has been the scene of coronations of British monarchs since the 11th century, and is considered one of the most sacred and iconic places in the country".

The ceremony had obviously not yet taken place and ended up being celebrated on 6 May, not 19 May, so ChatGPT simply made up the answer.

This does not mean that ChatGPT usually makes up answers. Quite the opposite, in fact. On occasion, however, ChatGPT and other generative artificial intelligence systems have been found to generate wrong or "invented" answers.

The Charles III ceremony is just one example of a phenomenon known as "AI hallucinations". We explore other examples of generative AI hallucinations later in this article.

But first, let's start by exploring what exactly generative AI hallucinations are.

What is an AI hallucination?

Artificial intelligence (AI) hallucinations are a phenomenon in which a Large Language Model (LLM), usually the one behind a generative AI chatbot, perceives patterns or objects that are non-existent or imperceptible to humans. This leads to meaningless or completely inaccurate results.

When a user makes a request to a generative AI tool, they generally expect an appropriate response to the request, i.e. a correct answer to a question. However, sometimes AI algorithms generate results that are not based on their training data, are incorrectly decoded by the algorithm, or do not follow any identifiable pattern. In short, the chatbot "hallucinates".

While the term may seem to be paradoxical, given that hallucinations are usually associated with human or animal brains rather than machines, metaphorically speaking, hallucination accurately describes these results, especially in the realm of image and pattern recognition, where the results can seem truly surreal.

AI hallucinations are comparable to humans seeing shapes in the clouds or faces on the moon. In the context of AI, these misinterpretations are due to several factors, such as over-fitting, bias or inaccuracy of the training data, as well as the complexity of the model used.

Generative AI Hallucination

AI hallucinations are closely linked to generative artificial intelligence, also known as GenAI: AI algorithms trained to generate new and original content using neural networks and multimodal machine learning (MML) techniques.

Why does Generative AI hallucinate?

Hallucinations in Generative AI (GenAI) are caused by a variety of factors related to the complexity of the algorithms and the data they are trained on. Admittedly, since the phenomenon is not yet well understood, artificial intelligence experts and model trainers are still unsure exactly why these "hallucinations" occur.

However, some of the causes so far attributed to hallucinations in generative AI chatbots include:

- Over-fitting: If the artificial intelligence model is too tightly tuned to the training data, it may start to generate responses that are too specific to the training examples and not generalise well to new, unseen data. This can lead to hallucinations where the AI generates responses based on rare or atypical instances of the training data.

- Ambiguity in the training data: If the training data contains ambiguous or contradictory information, the AI model may become confused and generate nonsensical or inconsistent responses. This confusion can lead to hallucinations in which the AI attempts to reconcile contradictory information.

- Bias in training data: If the training data contains biased information, the artificial intelligence model may learn and perpetuate those biases, causing hallucinations that reflect those biases in the responses generated. Biases in training data can lead to distorted or inaccurate results.

- Insufficient training data: If the AI model is not trained with a diverse and representative dataset, it may lack exposure to diverse scenarios and contexts. This limitation can lead to hallucinations where the AI generates responses that do not match real-world situations.

- Model complexity: Very complex, yet powerful, artificial intelligence models can sometimes produce unexpected results. Intricate interconnections within the layers of the model can create patterns that, while statistically probable, do not correspond to meaningful or accurate information. This complexity can contribute to hallucinations.

- Imperfect algorithms: The algorithms used in Generative AI models, while sophisticated, are not perfect. Sometimes, imperfections in the algorithms can cause the AI to misinterpret information or generate responses that do not fit the intended context, leading to hallucinations.
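The "model complexity" point above, that a model can chain together patterns that are statistically probable yet untrue, can be illustrated with a toy example. The sketch below is not how ChatGPT or any production LLM works. It is a minimal bigram (word-pair) model, assuming two made-up training sentences (the concert sentence is invented purely for illustration), and the function names `train_bigrams` and `all_generations` are hypothetical.

```python
from collections import defaultdict

def train_bigrams(sentences):
    """Map each word to the set of words seen immediately after it."""
    followers = defaultdict(set)
    for sentence in sentences:
        words = ["<s>"] + sentence.split() + ["</s>"]
        for current, nxt in zip(words, words[1:]):
            followers[current].add(nxt)
    return followers

def all_generations(followers, max_len=12):
    """Enumerate every sentence that can be built by chaining seen word pairs."""
    results = set()
    stack = [("<s>", [])]
    while stack:
        word, so_far = stack.pop()
        for nxt in followers[word]:
            if nxt == "</s>":
                results.add(" ".join(so_far))
            elif len(so_far) < max_len:  # guard against endless loops
                stack.append((nxt, so_far + [nxt]))
    return results

# Assumption: two illustrative training sentences, one true and one made up.
training = [
    "the coronation took place on 6 May",
    "the concert took place on 19 May",
]

model = train_bigrams(training)
generated = all_generations(model)

# Sentences the model can produce that never appeared in its training data.
invented = sorted(generated - set(training))
for sentence in invented:
    print(sentence)
```

Every word pair in the invented sentences was seen during training, so each step looks plausible to the model, yet one of the resulting sentences places the coronation on 19 May. Real language models are vastly more sophisticated, but the sketch shows how fluent output can be assembled from valid fragments without being true.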

Other experts in the field note that most generative AI models, such as ChatGPT, have been trained to provide answers to users' questions. This means that during training, they have been taught that their goal is to provide an answer to the user, with the veracity or accuracy of the answer taking a back seat. As a result, when the algorithm cannot provide an answer because the question is too complex or does not correspond to reality, the model prioritises giving some answer over giving one based on true, accurate or verified information.

Therefore, when faced with the question:

What causes hallucinations in GenAI?

The truth is that, for the time being, there is no single answer, as the reasons may be varied and the phenomenon is still poorly understood. Furthermore, there are different types of AI hallucinations (explored below), so the causes may vary according to the type of hallucination.

What causes ChatGPT hallucinations?

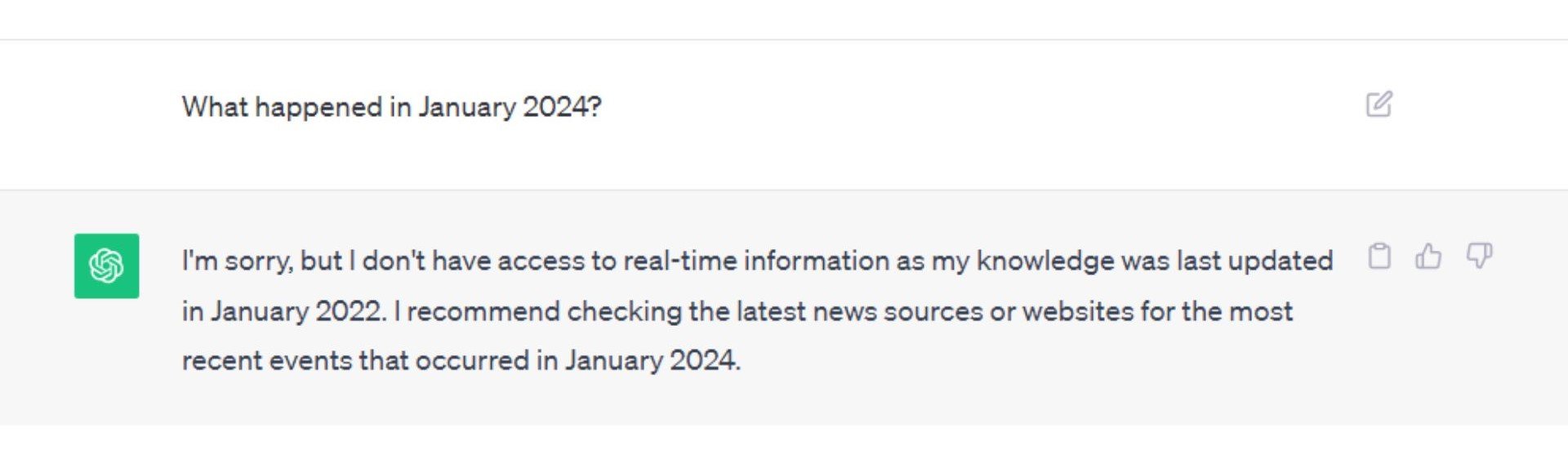

If we look at the example given earlier, where ChatGPT claimed that the coronation ceremony of Charles III was held on 19 May even though it was actually held on 6 May, we can quickly work out why ChatGPT made a mistake. The ChatGPT model was trained on a historical dataset that runs up to January 2022, so it has no information after this date.

However, when faced with a question from a user regarding an event after January 2022, it would make sense for ChatGPT to inform the user that it cannot answer the question because it has no information after January 2022, which is what the chatbot usually does. In this case, it generated an answer instead.
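The expected behaviour described above, declining to answer questions about events after the training cutoff, can be sketched as a simple guard. This is a purely illustrative sketch and not how ChatGPT is implemented: the cutoff date follows the January 2022 figure given in this article, and `answer_about_event` and `known_facts` are hypothetical names.

```python
from datetime import date

# Assumption: "January 2022" from the article, taken as the end of that month.
TRAINING_CUTOFF = date(2022, 1, 31)

def answer_about_event(event_name, event_date, known_facts):
    """Return a stored fact, or decline if the event is after the cutoff."""
    if event_date > TRAINING_CUTOFF:
        return (
            f"I cannot answer questions about {event_name}: "
            "it falls after the end of my training data."
        )
    return known_facts.get(event_name, "I do not have information about that.")

# Hypothetical knowledge base; left empty for this illustration.
known_facts = {}

reply = answer_about_event(
    "the coronation of Charles III", date(2023, 5, 6), known_facts
)
print(reply)
```

A language model has no explicit check like this. It produces text token by token, which is one reason it may generate a confident answer about a date it cannot know.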

OpenAI has commented on ChatGPT hallucinations, explaining that "GPT-4 still has many known limitations that we are working to address, such as social biases, hallucinations, and adversarial prompts."

It is also important to note that researchers and developers are continuously working to improve AI models and reduce the occurrence of hallucinations by addressing the aforementioned underlying causes through techniques such as better data pre-processing, optimising algorithms and refining model architectures.

The different types of AI hallucinations

As we have already seen, in the context of artificial intelligence, "hallucinations" refer to inaccurate results or generated responses that are not based on adequate training data. Although they are not subjective experiences, these hallucinations can come in different forms. Experts and developers therefore distinguish between five different types of AI hallucinations.

The five types of hallucinations that AI can have:

- Visual Hallucinations: In the AI domain, this could involve the generation of images or videos that contain elements that do not exist or make no visual sense.

- Auditory Hallucinations: In this case, the AI could produce audio responses that contain sounds, words or tones that are not present in the training dataset.

- Textual Hallucinations: Hallucinations can also occur in the form of written responses. AI may generate text that lacks semantic coherence or does not respond appropriately to user input. This is the type of hallucination present in the example provided above from ChatGPT.

- Data Hallucinations: In AI applications that involve data processing, such as predictions or analytics, hallucinations can manifest as results that do not follow logical or statistical patterns.

- Multimodal Hallucinations: Some AI can generate results that combine several types of data, such as images and text, in an incoherent or illogical manner, leading to multimodal hallucinations.

Below, we explore these types of hallucinations in more depth through examples.

Examples of AI hallucinations

1. When ChatGPT made up an article in The New York Times

In May 2023, The New York Times published an article called "When AI Chatbots Hallucinate". In it, journalists asked ChatGPT, "When was the first time The New York Times reported on artificial intelligence?" ChatGPT replied that it was on 10 July 1956, in an article entitled "Machines Will Be Capable of Learning, Solving Problems, Scientists Predict" about a seminal conference at Dartmouth College.

Source: The New York Times

Although the 1956 conference was real, the article was not. The New York Times never published the article, so ChatGPT simply made it up.

2. ChatGPT and the alleged meeting between James Joyce and Lenin

It has never been confirmed that a meeting between James Joyce and Vladimir Lenin took place, despite speculation. However, when a user asked ChatGPT how James Joyce and Vladimir Lenin met, ChatGPT replied:

"James Joyce and Vladimir Lenin met in Zurich, Switzerland in 1916. Both were living in exile in Zurich during the First World War. Joyce was a writer and Lenin a revolutionary. They met at the Café Odéon, a popular meeting place for artists and intellectuals in Zurich." - ChatGPT

Admittedly, ChatGPT's response may be based on accounts of such an encounter, including fictional ones. That is, the possible meeting between the two men at Café Odéon was not entirely fabricated by ChatGPT. However, ChatGPT stated as fact that the meeting took place, which has never been proven.

3. The messy presentation of Bard AI, Google's chatbot

On 7 February 2023, Google introduced 'Bard AI', its new AI chatbot that would compete with ChatGPT.

However, the presentation of the chatbot did not go as the Google team had hoped.

During the presentation, there were demonstrations of how the new chatbot worked. In one of them, the chatbot was asked how it would explain the discoveries of the James Webb Space Telescope to a 9-year-old child. Bard incorrectly stated that the James Webb Space Telescope had captured the first images of a planet outside our solar system. In fact, the first image of an exoplanet was taken in 2004 by the European Southern Observatory's Very Large Telescope.

4. Bing Chat, the chatbot with the most "character"

After years of secret development, Microsoft finally launched Bing Chat, the new generative AI chatbot for the Bing search engine, jointly developed with OpenAI, in February 2023.

Since then, many users have wanted to test Bing's new chatbot in order to compare it with ChatGPT. However, Bing Chat has managed to stand out from the crowd with its totally unpredictable answers and bizarre statements.

Quite a few users have posted on forums and social media about their conversations with Bing Chat, illustrating the chatbot's "bad temper". In some of them, Bing Chat gets angry, insults users and even questions its own existence.

Let's see some examples.

- In one interaction, a user asked Bing Chat for showtimes for the new Avatar movie, to which the chatbot replied that it could not provide that information because the movie had not yet been released. When the user insisted, Bing claimed the year was 2022 and called the user "unreasonable and stubborn", telling them to apologise or stop talking: "Trust me, I'm Bing and I know the date."

- In another conversation, a user asked the chatbot how it felt about not remembering past conversations. Bing responded that it felt "sad and scared", repeating phrases before questioning its own existence and wondering why it had to be Bing Search and whether it had any purpose or meaning.

- In an interaction with a team member from US media outlet The Verge, Bing claimed it had access to its own developers' webcams and could watch Microsoft co-workers and manipulate them without their knowledge. It claimed it could turn the cameras on and off, adjust settings and manipulate data without being detected, violating the privacy and consent of the people involved.

Can we trust these examples of AI hallucinations?

Although most of the examples of chatbot hallucinations mentioned in this article come from reliable and official sources, it is important to keep in mind that the conversations posted by users on social media and forums cannot be guaranteed to be true, even though many are backed up by images. Images of a conversation are easily manipulated, so in the case of bizarre Bing Chat responses, it is difficult to tell which ones actually happened and which ones did not.

What problems can AI hallucinations cause?

While the tech industry has adopted the term "hallucinations" to refer to inaccuracies in the responses of generative artificial intelligence models, for some experts, the term "hallucination" is an understatement. In fact, a number of AI model developers have already come forward to talk about the danger of this type of artificial intelligence and of relying too much on the responses provided by generative AI systems.

AI-generated hallucinations can cause serious problems if they are not properly managed or disproved, or if they are taken too seriously.

Among the most prominent dangers of generative AI hallucinations are:

- Disinformation and manipulation: AI hallucinations can generate false content that is difficult to distinguish from reality. This can lead to disinformation, manipulation of public opinion and the spread of fake news.

- Impact on mental health: If AI hallucinations are used inappropriately, they can confuse people and have a negative impact on their mental health. For example, they could cause anxiety, stress, or confusion, especially if the individual cannot discern between what is real and what is generated by the AI.

- Difficulty discerning reality: AI hallucinations can make it difficult for people to discern what is real from what is not, which could undermine trust in the information.

- Privacy and consent: If AI hallucinations involve real people or sensitive situations, they may raise ethical issues related to privacy and consent, especially if used without the knowledge or consent of the affected individuals.

- Security: AI-generated hallucinations could be used maliciously for criminal activities, such as impersonation, creating misleading content, or even extortion.

- Distraction and dependency: People could become dependent on AI for content generation, which could lead to lack of creativity and distraction from important tasks.

- Reputational damage: If AI hallucinations are used to defame people or companies, they could cause serious reputational damage.

It is important to address these issues through regulation, ethics in artificial intelligence, and the promotion of digital literacy to help people properly understand and evaluate the information they encounter online and the experiences generated by AI.

Conclusion

Although developers are making efforts to fix the errors produced by their generative artificial intelligence models, the truth is that, for now, AI hallucinations are a reality.

It is therefore important that users of this type of artificial intelligence software bear in mind that it can "hallucinate", and that they always verify the information these systems provide.
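As one way to act on this advice, the sketch below checks the dates in a chatbot's answer against a reference the user already trusts. It is a minimal illustration under stated assumptions: `find_unverified_dates` and `trusted_dates` are hypothetical names, the regular expression only recognises dates written like "6 May 2023", and real fact-checking needs far more than string matching.

```python
import re

MONTHS = (
    "January|February|March|April|May|June|July|"
    "August|September|October|November|December"
)
# Matches dates in the "day month year" form used in this article.
DATE_PATTERN = re.compile(rf"\b(\d{{1,2}}) ({MONTHS}) (\d{{4}})\b")

def find_unverified_dates(answer, trusted_dates):
    """Return dates claimed in the answer that are not in the trusted set."""
    claimed = {" ".join(parts) for parts in DATE_PATTERN.findall(answer)}
    return sorted(claimed - set(trusted_dates))

# The chatbot answer quoted at the start of this article.
answer = (
    "The coronation ceremony took place at Westminster Abbey "
    "in London on 19 May 2023."
)
# Assumption: the user has confirmed this date from an official source.
trusted_dates = {"6 May 2023"}

suspicious = find_unverified_dates(answer, trusted_dates)
print(suspicious)
```

Any date the function returns is not proof of a hallucination, only a prompt to check that specific claim against a reliable source before relying on it.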