Discover how metadata drives modern data integration: traceability, quality, automation and data governance in complex environments.

In today’s data governance and analytics ecosystems, there’s a foundational element that often goes unnoticed, but holds everything together: metadata.

When applied to data integration, metadata provides critical context: it reveals where data originates, how it’s transformed, which rules are applied, and who interacts with it. In short, it’s the backbone of any reliable and scalable integration process.

For years, metadata was treated as a secondary concern, merely documentation for developers. But in a world where data flows at the speed of business, metadata has become a strategic asset. It’s essential for ensuring data traceability, quality, and operational efficiency.

Understanding the role of metadata in data integration is no longer just a technical requirement, it’s a prerequisite for making informed, confident decisions. Without it, systems are opaque, errors go undetected, and data-driven analysis becomes unreliable.

Because if you don’t know how your information flows, where it comes from, or what it means, you’re building your insights on uncertain ground. And in the world of data, that’s a risk no organization can afford.

In recent years, the volume, variety, and velocity of data have pushed traditional data integration methods to their limits. Today’s increasingly complex ecosystems demand more than just moving data from point A to point B, they require a deep understanding of its origin, meaning, transformation, and data governance.

In short, data management has become a growing challenge, and metadata has emerged as the most obvious solution.

The New Era of Data Management: A Complete Guide

Learn how to improve data management in cloud environments and apply best practices in data governance.

Metadata has evolved from a technical afterthought into the backbone of intelligent, scalable, and resilient data integration architectures. It enables organizations to build trust in their data, reduce complexity, and unlock the full potential of modern analytics.

What Is Metadata and Why Is It Essential to Data Integration

At its core, metadata is “data about data”, a deceptively simple definition that holds deep conceptual value. Within the context of data integration, cross-system interoperability, and modern data architecture, metadata describes:

- Where the data comes from (origins)

- Where it goes (destinations)

- How it is transformed

- What rules and logics are applied

- What impact each modification has

- Who accesses, when and for what purpose

Without metadata, integration systems become opaque, rigid, and prone to error. But when metadata is properly managed, those same systems become transparent, traceable, and adaptable.

The term metadata was first said in 1969 by Jack E. Myers, long before it became a cornerstone of modern data architecture. Myers described metadata as the codified knowledge embedded within a data system: information that defines and explains other information, beyond the data itself.

- Want to dive deeper? Read our article: Metadata: Meaning, Types of Metadata and Metadata Management

Metadata: The Secret Ingredient Behind Sustainable Data Integration

Metadata is like the secret ingredient that brings everything together. You can have the ingredients (the data), the tools (your platforms), and the heat (your infrastructure), but without metadata, the final result lacks structure, meaning, and reliability.

In data integration processes, metadata provides essential insight: it reveals what data is being processed, how it's transformed, why specific rules are applied, and what impact each action has along the pipeline. It enables traceability, context, and control over the entire flow of information.

When managed effectively, metadata plays a crucial role in ensuring data quality, identifying and preventing errors, supporting audits, fostering collaboration across teams, and maintaining compliance with data protection and governance regulations.

Metadata in Action: Integration, Traceability and Control

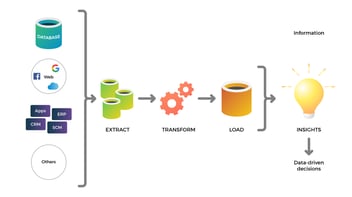

In practice, metadata plays a critical role in data integration. It enables organizations to:

- Trace the complete data lineage of any data point—from its origin to its final use.

- Ensure quality and consistency across ETL and ELT processes.

- Detect errors, validate transformations, and enforce data controls.

- Meet regulatory requirements by logging processes, user access, and data transformations.

- Drive automation across modern integration platforms.

In today’s data landscape, simply having data is no longer enough. What truly matters is understanding where that data comes from, how it evolves, and what purpose it serves.

And to achieve that level of insight and control, metadata is no longer a nice-to-have, it’s a foundational requirement.

From Traditional Integration to Metadata-Driven Architecture

For years, data integration relied on ad hoc solutions: custom-built pipelines, business logic embedded in scattered code, and a heavy dependence on the tacit knowledge of a few individuals.

Every change was costly. Integrating a new system meant redesigning processes from scratch. Errors —silent yet persistent— accumulated unnoticed. The result? Fragile infrastructures, poor scalability, and millions lost to inefficiencies and unreliable data.

In response to these limitations, a new paradigm has emerged: metadata-driven data integration.

In this approach, metadata is no longer treated as a technical by-product—it becomes the driving force behind data integration processes. Transformation logic is not hardcoded; it’s defined through metadata: mappings, business rules, orchestration steps. A specialized engine interprets this metadata and executes the necessary operations automatically.

Development is replaced by configuration, unlocking agility, transparency, and maintainability. This shift changes everything.

A metadata-driven integration framework enables organizations to:

- Automate data processing tasks

- Scale integration workflows with ease

- Reuse mappings and logic across projects

- Evolve systems without rewriting code

- Democratize access to data and integration logic

Metadata-Driven Data Integration: Tangible Benefits That Matter

The shift to a metadata-driven approach isn’t just theoretical—it delivers concrete, measurable advantages:

- Standardization: Consistent application of rules across all sources and processes.

- Reusability: Design once, apply many times—accelerating development cycles.

- Automation: Less manual effort, faster execution, fewer human errors.

- Scalability: Easily onboard new data sources and destinations without disrupting existing pipelines.

- Simplified maintenance: Update the metadata—not the code—when changes are needed.

- Cross-functional collaboration: Business users can participate in defining logic without writing code.

- Agility: Quickly adapt to new requirements, formats, or regulatory needs without friction.

And above all, it enables greater confidence in the data, a critical foundation for making reliable, data-driven decisions.

Core Components of a Metadata-Driven Integration Architecture

A modern metadata-driven integration framework typically rests on three components that work together to enable dynamic, scalable, and maintainable data pipelines:

1. Metadata Repository: The System’s Source of Truth

The metadata repository serves as the central hub of a metadata-driven architecture. It stores and organizes all the critical information related to integration processes, including data models, inter-system mappings, transformation rules, configurations, data quality policies, audit logs, and operational parameters.

But its purpose goes far beyond documentation. The metadata repository functions as the single source of truth from which data flows are executed and governed. A robust repository should be centralized, easily accessible, and capable of consolidating metadata from both batch and real-time integration pipelines. Ideally, it should also integrate with other key components of the data ecosystem—such as governance frameworks, data quality tools, and metadata catalogs.

Still documenting your data and metadata manually? There's a better way.

Governance for Power BI

is a specialized solution that combines two powerful capabilities:

- Comprehensive activity tracking in Power BI, with no time, user, or workspace limits, delivering a complete and detailed history of user interactions.

- Automated dataset documentation, including business and functional descriptions that improve understanding and accessibility for non-technical users.

2. Metadata Management Tool: A No-Code Interface for Technical and Business Users

The metadata management tool provides the interface for creating, editing, validating, and maintaining metadata—without writing a single line of code. It empowers both technical teams and business users to define mappings, apply transformation rules, document data flows, and manage version control, all through an intuitive, no-code environment.

The most advanced solutions go a step further: they integrate machine learning algorithms capable of suggesting transformations, detecting inconsistencies, and validating data quality automatically. This dramatically reduces operational effort while improving consistency and trust in the data.

Leading platforms in this space include Azure Purview, Unity Catalog, Talend Metadata Manager, Informatica Metadata Manager, and Alation—each offering robust capabilities for data cataloging, traceability, and governance, seamlessly integrated with broader data management workflows.

3. Data Integration Engine: Dynamic and Automated Execution

The data integration engine is the component responsible for executing the processes defined in the metadata. It handles the movement, transformation, and orchestration of data according to preconfigured rules—without requiring manual coding for each individual pipeline.

This layer allows teams to define logic once in the metadata repository, and let the engine interpret and execute it dynamically. The result: faster deployment, fewer errors, and greater consistency across integration workflows.

This capability is supported by powerful tools such as Azure Data Factory, Apache Airflow, AWS Glue, Matillion, DBT, and Databricks. In addition, highly automated platforms like Fivetran and Stitch natively follow this metadata-driven model, further simplifying implementation and reducing technical overhead.

Use Cases Where Metadata Makes a Real Difference

Metadata-driven data integration frameworks are far from theoretical—they’re already being used successfully in real-world scenarios where complexity, scale, and traceability demand a smarter approach. Here are just a few examples where this model shines:

1. Migrating from Legacy to Modern Architectures

Moving from legacy platforms to modern cloud environments or data lakes often requires a complete restructuring of models, transformation logic, and data flows.

When metadata is used as the foundation, organizations can define reusable mappings, document the full data lineage of migrated assets, and ensure consistency between source and target systems.

The result: faster, auditable migrations with reduced risk of information loss.

2. Integrating Multiple and Heterogeneous Data Sources

In many organizations, data lives across a wide range of systems—relational databases, flat files, APIs, cloud storage, or legacy platforms.

A metadata-driven framework acts as an abstraction layer, enabling seamless connection, normalization, and consolidation of data across all these sources—without needing custom code for each integration.

- You may be interested in: What is the Medallion Data Architecture?

3. Democratization and Self-Service for Business Users

One of the biggest challenges in scaling data operations is reducing dependency on technical teams for routine tasks.

With metadata-driven integration, business users can reuse existing pipelines and adapt them simply by configuring new mappings or targets—without writing any code.

This unlocks true self-service BI, accelerates decision-making, and reinforces a data-driven culture across the organization.

4. Real-Time Integration with Metadata-Defined Rules

Streaming data flows —common in operational, financial, and digital marketing environments— require a high degree of flexibility and adaptability.

By defining schemas, transformations, and validations as metadata, organizations can build real-time pipelines that are not only more robust, but also easier to update on the fly—without system downtime. This approach also enhances traceability, making it easier to monitor changes and maintain compliance even in dynamic, high-volume scenarios.

5. Enabling Data Fabric and Composable Architectures

In data fabric initiatives, where data must be made available in multiple formats and destinations across a distributed ecosystem, metadata acts as the connective tissue that enables cross-system orchestration.

It allows organizations to apply standardized rules, promote reusability, and ensure that information flows securely and consistently across heterogeneous systems—while maintaining governance, auditability, and semantic coherence.

8 Key Principles for Designing a Metadata-Driven Data Integration Framework

A successful metadata-driven architecture isn’t just about tools—it's about following a set of foundational principles that ensure long-term scalability, clarity, and business alignment. Here are the eight key pillars:

- Metadata as the Foundation: Metadata is not a secondary component—it is the single source of truth for your integration logic and data governance strategy.

- Standardization: Using standardized metadata models across all integration processes ensures interoperability between systems and simplifies maintenance.

- Business Orientation: Metadata must reflect the language and logic of the business—not just technical schemas—so it can be understood and used by all stakeholders.

- Tool Integration: The framework should seamlessly connect with other parts of the data ecosystem: quality checks, data modeling, governance workflows, and visualization tools.

- Agility: It should be easy to adapt to new data sources, structures, and business requirements without rewriting core logic.

- Automation First: Maximize automation to reduce manual tasks, accelerate delivery, and free up time for high-value analysis.

- Clear Governance: Define clear roles, responsibilities, policies, and procedures for managing metadata across the organization.

- Performance Measurement: Establish KPIs to monitor the impact of metadata on data quality, reliability, efficiency, and user adoption.

Conclusion: Know Your Metadata, Know Your Data

If there's one clear takeaway from this exploration, it's that metadata is the invisible thread that holds modern data integration together. It’s no exaggeration to say that the success—or failure—of a data platform hinges on how metadata is understood, managed, and leveraged.

A metadata-driven approach transforms how organizations work with data: shifting from improvisation to intentional design, from disjointed processes to governed systems, and from isolated technical work to true collaboration between business and IT.

In today’s data landscape, raw data alone holds limited value. Data only becomes an asset when it is clearly described, properly mapped, and accurately transformed. And none of that is possible without robust metadata.

Because to truly know your data, you must first know your metadata.

Otherwise, you're operating in the dark.

The New Era of Data Management: A Complete Guide

Learn how to improve data management in cloud environments and apply best practices in data governance.